Language-based AI Agents and Large Action Models (LAMs)

I am a speaker at the CVPR 2024 Tutorial on Generalist Agent AI. My talk describes benchmarks, algorithms and architectures related to Language-based AI Agents. Here are my slides.

Language-based AI Agents are a new generation of assistants capable of performing actions that a user specifies in natural language. These actions may take place in the digital world, the physical world, or a combination of both. For example, I might ask such an agent to “Place an online order for a new pair of black running shoes for me”.

My talk outlines a series of Language-based Agent architectures that range from simple to complex: (1) Prompt-template based Agents; (2) Learnable prompt Agents; (3) Large Action Models (LAMs); (4) Multi-agent orchestration. At Salesforce AI Research, our team has developed an ecosystem of open-source tools and implementations for studying Language-based AI Agents, and it covers the full range of these architectures.

Salesforce AI Research Open Source Ecosystem for AI Agents


Prompt-template Agents: These are LLM-based agents that rely on architectural design and templated prompts to execute actions. Generally, the agent architecture has no trainable components; instead, these agents are used zero-shot, or few-shot with in-context learning. We evaluated a variety of combinations of prompt-template agent architectures and LLMs in our recent BOLAA [1] paper. Here’s the code. In our REX [2] paper, we also proposed a new agent architecture that uses Monte Carlo Tree Search (à la AlphaGo) to improve agent effectiveness.
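To make the prompt-template idea concrete, here is a minimal sketch of such an agent loop. It assumes a generic text-in/text-out `llm` callable and a hypothetical `tool[argument]` action syntax; the template wording, tool names, and helper functions (`build_prompt`, `parse_action`, `run_agent`) are illustrative assumptions, not the designs evaluated in BOLAA. The only "learning" is whatever few-shot examples are pasted into the template.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical prompt template; the wording and the "tool[argument]" action
# syntax are illustrative assumptions, not the format used in BOLAA.
TEMPLATE = (
    "You are a shopping assistant. Reply with exactly one action per turn,\n"
    "formatted as tool[argument]. Available tools: {tools}.\n\n"
    "{examples}\n"
    "Task: {task}\n"
    "{history}Action:"
)


def build_prompt(task: str, tools: List[str], examples: List[str], history: List[str]) -> str:
    """Fill the fixed template; no part of the agent is trained."""
    return TEMPLATE.format(
        tools=", ".join(tools + ["finish"]),
        examples="\n".join(examples),  # few-shot demonstrations (empty list = zero-shot)
        task=task,
        history="".join(h + "\n" for h in history),
    )


def parse_action(text: str) -> Tuple[str, str]:
    """Split a completion such as 'search[black shoes]' into (tool, argument)."""
    name, _, rest = text.strip().partition("[")
    return name.strip(), rest.rstrip("]")


def run_agent(
    task: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    examples: List[str],
    max_steps: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Loop: render prompt -> query frozen LLM -> execute action -> record observation."""
    history: List[str] = []
    for _ in range(max_steps):
        name, arg = parse_action(llm(build_prompt(task, list(tools), examples, history)))
        if name == "finish":
            return arg, history
        observation = tools[name](arg) if name in tools else f"Unknown tool: {name}"
        history.append(f"Action: {name}[{arg}]\nObservation: {observation}")
    return None, history  # step budget exhausted


# Usage with a scripted stand-in for the LLM (replace with a real model call).
def scripted_llm(responses: List[str]) -> Callable[[str], str]:
    replies = iter(responses)
    return lambda prompt: next(replies)


tools = {
    "search": lambda query: f"Found 3 results for '{query}'",
    "buy": lambda item: f"Order placed for {item}",
}
answer, trace = run_agent(
    "Place an online order for a new pair of black running shoes",
    scripted_llm(["search[black running shoes]", "buy[item-1]", "finish[order placed]"]),
    tools,
    examples=[],
)
print(answer)  # order placed
```

Swapping the architecture (for example, adding a planning step or a different output format) only changes the template and the loop, which is what makes these agents cheap to compare across LLMs.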


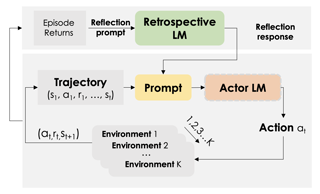

Learnable-prompt Agents: Taking this a step further, we can make part of the prompt construction learnable. In our recent Retroformer [3] paper, we trained a retrospective module that automatically tunes the language agent’s prompts from environment feedback via policy gradient. Our paper shows that this process makes the frozen LLM more effective at performing actions. We released the code here.
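The core mechanism, tuning only the prompt side with a policy gradient while the actor LLM stays frozen, can be illustrated with a deliberately simplified toy. This is not Retroformer’s algorithm: here the “retrospective module” is just a softmax over a fixed list of candidate reflections, trained with plain REINFORCE and a moving-average baseline, and `fake_episode_return` is a hypothetical stand-in for running the frozen agent in an environment.

```python
import math
import random
from typing import Callable, List


class ReflectionPolicy:
    """Softmax policy over a fixed set of candidate reflections.

    Toy stand-in for a trainable retrospective module: the chosen reflection is
    added to the frozen actor LLM's prompt, and only these logits are learned.
    """

    def __init__(self, candidates: List[str]):
        self.candidates = candidates
        self.logits = [0.0] * len(candidates)

    def probs(self) -> List[float]:
        m = max(self.logits)  # subtract max for numerical stability
        exps = [math.exp(x - m) for x in self.logits]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self, rng: random.Random) -> int:
        return rng.choices(range(len(self.candidates)), weights=self.probs())[0]

    def reinforce(self, action: int, advantage: float, lr: float) -> None:
        """REINFORCE step: d log pi(action) / d logit_i = 1[i == action] - p_i."""
        p = self.probs()
        for i in range(len(self.logits)):
            grad = (1.0 if i == action else 0.0) - p[i]
            self.logits[i] += lr * advantage * grad


def train(
    policy: ReflectionPolicy,
    episode_return: Callable[[str], float],
    steps: int = 500,
    lr: float = 0.5,
    seed: int = 0,
) -> ReflectionPolicy:
    rng = random.Random(seed)
    baseline = 0.0
    for _ in range(steps):
        a = policy.sample(rng)
        r = episode_return(policy.candidates[a])  # run the frozen agent once with this reflection
        policy.reinforce(a, r - baseline, lr)
        baseline = 0.9 * baseline + 0.1 * r  # moving-average baseline reduces variance
    return policy


# Hypothetical environment: the frozen agent succeeds only when reminded about size.
def fake_episode_return(reflection: str) -> float:
    return 1.0 if "size" in reflection else 0.0


policy = train(
    ReflectionPolicy(["Check the shoe size before buying.", "Try again.", ""]),
    fake_episode_return,
)
print([round(p, 3) for p in policy.probs()])
```

Probability mass shifts toward the reflection that leads to higher returns, even though the model that actually acts never receives a gradient.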


Large Action Models (LAMs): While the previous strategies can be effective when little data is available, a different approach becomes possible when we have access to examples that pair user task inputs with their execution as a sequence of action steps. In this case, we can afford to further train the LLM into a Large Action Model (LAM). We can think of a LAM as an LLM specifically trained from data to execute actions. The first key ingredient, of course, is the data itself. In our AgentOhana [4] paper, we developed a unified data pipeline and data format that aggregates action trajectories from a multitude of environments. As a result, we were able to train our xLAM model and show that it can achieve state-of-the-art performance on multiple evaluation benchmarks. We’ve released the data pipeline and xLAM models.
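The value of a unified format is that trajectories recorded in very different environments end up as one homogeneous training set. The sketch below assumes two hypothetical source formats (parallel observation/action lists, and chat-style turns) and a hypothetical target schema with `source`, `task`, `steps`, and `reward` fields; it is not AgentOhana’s actual schema, only an illustration of normalizing heterogeneous trajectories into JSON Lines.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Step:
    observation: str
    action: str
    thought: Optional[str] = None  # optional reasoning trace, if the source recorded one


@dataclass
class Trajectory:
    source: str  # which environment the episode came from
    task: str  # the user's natural-language instruction
    steps: List[Step] = field(default_factory=list)
    reward: Optional[float] = None


def from_parallel_lists(raw: Dict[str, Any]) -> Trajectory:
    """Hypothetical format A: parallel lists of observations and actions."""
    steps = [Step(o, a) for o, a in zip(raw["observations"], raw["actions"])]
    return Trajectory("parallel_lists", raw["instruction"], steps, raw.get("reward"))


def from_chat_turns(raw: Dict[str, Any]) -> Trajectory:
    """Hypothetical format B: a user turn, then alternating assistant/environment turns."""
    turns = raw["messages"]
    task = turns[0]["content"]
    steps: List[Step] = []
    observation = task  # the first action responds to the task itself
    for turn in turns[1:]:
        if turn["role"] == "assistant":
            steps.append(Step(observation=observation, action=turn["content"]))
        else:
            observation = turn["content"]  # environment feedback for the next action
    return Trajectory("chat_turns", task, steps, raw.get("score"))


def to_jsonl(trajectories: List[Trajectory]) -> str:
    """One JSON object per line, ready to be mixed into a single training set."""
    return "\n".join(json.dumps(asdict(t)) for t in trajectories)


a = from_parallel_lists({
    "instruction": "Buy black running shoes",
    "observations": ["home page", "search results"],
    "actions": ["search[black running shoes]", "click[item-1]"],
    "reward": 1.0,
})
b = from_chat_turns({"messages": [
    {"role": "user", "content": "Book a table for two"},
    {"role": "assistant", "content": "search_restaurants(party=2)"},
    {"role": "user", "content": "3 restaurants found"},
    {"role": "assistant", "content": "book(id=1)"},
]})
print(to_jsonl([a, b]))
```

Each new environment only needs one small converter; everything downstream (filtering, mixing, training) sees a single schema.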


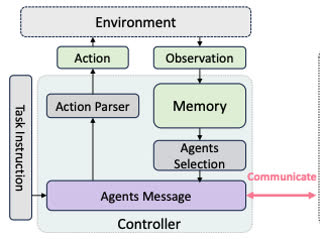

Multi-Agent Orchestration: The three single-agent architectures above can be quite effective in various application domains. However, some tasks may be so complex that they require deep specialization across a very wide range of domains. In that case, it may be desirable to modularize the architecture into multiple specialized agents coordinated by an orchestration mechanism in a central controller. Our AgentLite library simplifies the implementation of new agent and multi-agent architectures, making it easy to orchestrate multiple agents through a manager agent. In our BOLAA [1] paper, we designed a multi-agent architecture that outperforms single-agent approaches.
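The manager pattern can be sketched in a few lines: a central controller decomposes the task into subtasks and routes each one to the specialist that owns that skill. All class and function names below (`SpecialistAgent`, `ManagerAgent`, `shoe_plan`) are hypothetical and do not reflect AgentLite’s API; the planner is hard-coded here, whereas in a real system it would typically be an LLM call, and each specialist would be an LLM-backed agent with its own prompts and tools.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SpecialistAgent:
    name: str
    skill: str
    act: Callable[[str], str]  # in practice, an LLM-backed agent with its own prompt/tools


class ManagerAgent:
    """Central controller: decomposes a task and routes each subtask to a specialist."""

    def __init__(
        self,
        agents: List[SpecialistAgent],
        plan: Callable[[str], List[Tuple[str, str]]],
    ):
        self.agents: Dict[str, SpecialistAgent] = {a.skill: a for a in agents}
        self.plan = plan  # task -> [(skill, subtask), ...]; could itself be an LLM call

    def run(self, task: str) -> List[Tuple[str, str]]:
        transcript: List[Tuple[str, str]] = []
        for skill, subtask in self.plan(task):
            agent = self.agents.get(skill)
            if agent is None:
                transcript.append(("manager", f"no agent with skill '{skill}'"))
                continue
            transcript.append((agent.name, agent.act(subtask)))
        return transcript


# Hypothetical rule-based planner for the running-shoes example.
def shoe_plan(task: str) -> List[Tuple[str, str]]:
    return [("search", "black running shoes"), ("checkout", "item-1")]


manager = ManagerAgent(
    [
        SpecialistAgent("SearchAgent", "search", lambda q: f"3 results for {q}"),
        SpecialistAgent("CheckoutAgent", "checkout", lambda item: f"order placed for {item}"),
    ],
    shoe_plan,
)
for agent_name, output in manager.run("Place an online order for a new pair of black running shoes"):
    print(f"{agent_name}: {output}")
```

Keeping routing in the manager means a specialist can be added or replaced without touching the others, which is the modularity argument made above.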

The road ahead for AI Agents and LAMs

I’m interested in several areas of research around AI Agents and LAMs. In no particular order:

- Multimodal AI Agents: we are looking at adding other sensory modalities to the agent framework, both to enable multimodal communication between user and agent and to let the agent interact with multimodal environments.

- Efficient learning and generalization: Learning still requires a significant amount of data, so data-efficient methods are attractive. Also of interest is generalization to more domains and environments, so that knowledge can be transferred efficiently from one domain to another.

- Task complexity and agent specialization: our multi-agent experience indicates that orchestrating multiple specialized agents can achieve better performance than a monolithic agent, particularly as task complexity increases. Understanding how far agent specialization can go within a single domain, compared with decomposing the task across multiple agents, could lead to more efficient implementations.

- More advanced benchmarking to help push capabilities forward: We are in the early stages of agent benchmarking, and it is very important to keep creating more detailed evaluation frameworks, with environments that support more complex tasks. This will be critical for developing more capable AI Agent algorithms.

References
