Level up your Agents: Teaching Vision-Language Models to Play by the Rules

Large language models (LLMs) have shown incredible promise in creating intelligent agents that can understand and achieve our goals in interactive digital worlds. Examples include Language-based Agents and Large Action Models (LAMs) [1] [2], which combine the extensive knowledge and reasoning abilities of LLMs with learning methods that steer them toward specific agentic goals, moving beyond simple text continuation. Now, imagine giving these agents eyes! That’s where vision-language models (VLMs) come in, extending the power of LLMs to process visual information. This opens up exciting new possibilities, enabling tasks that demand visual understanding, such as enhancing robotic control or automating tasks on your computer by “seeing” and interacting with the screen.

However, there’s a catch. While VLMs are great at answering general questions about images, they sometimes struggle with the nitty-gritty details of specific agent tasks. This is in contrast with LLMs, which excel at tasks requiring structured outputs, such as function calling and adhering to a specific syntax; VLMs often lag in these critical areas. Think of it like this: a VLM might be able to describe a button on a screen perfectly, but it might not know the exact command needed to click that button within a particular application. VLMs tend to focus on open-ended understanding rather than on the strict output rules that many environments require.

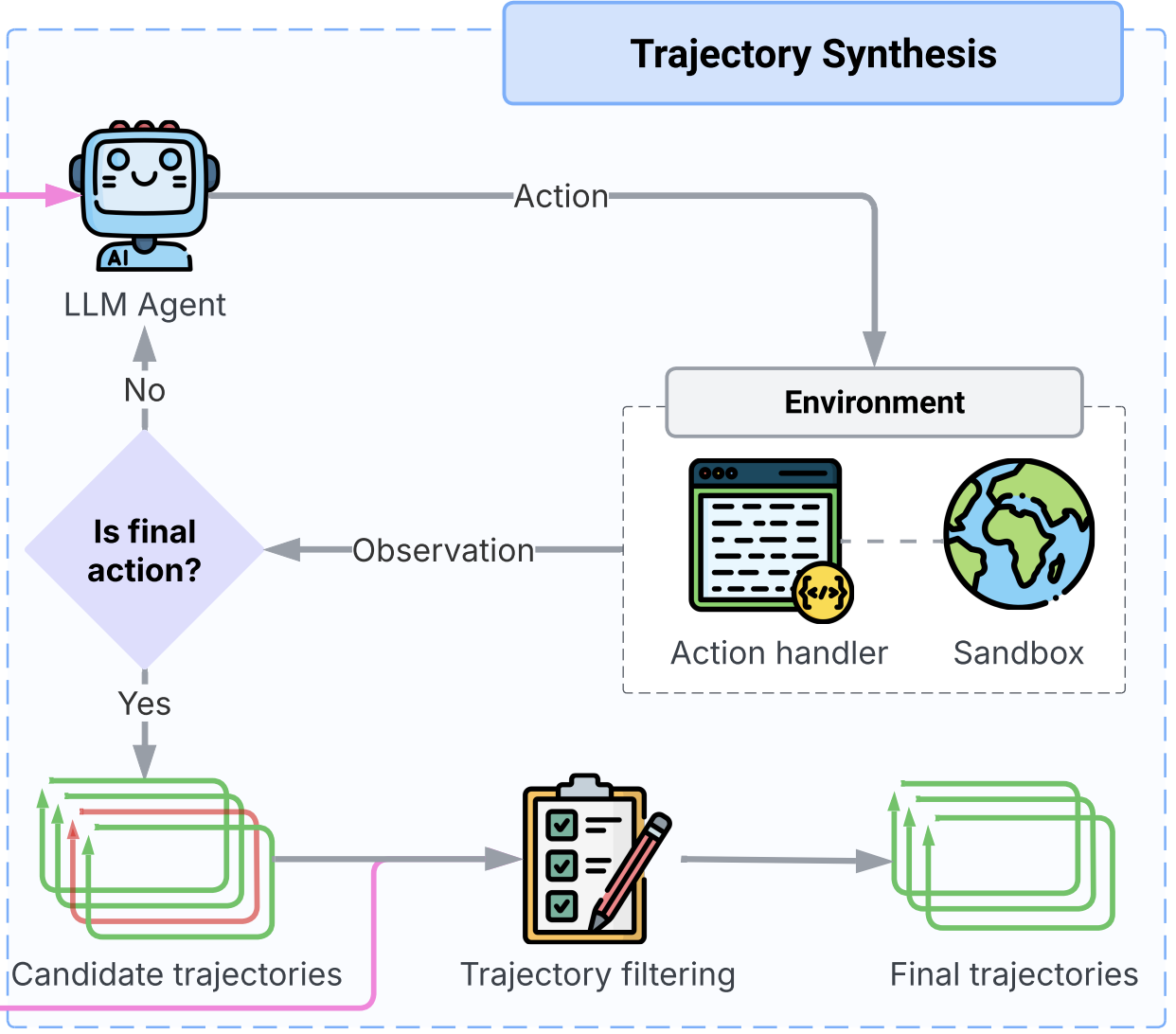

The traditional way to overcome this limitation is through supervised fine-tuning (SFT), where we feed the VLM a bunch of examples of experts successfully completing the task. Although somewhat effective, SFT has an inherent limitation: it cannot surpass the performance of the best strategy within its training data, and when these datasets are imperfect or contain suboptimal decisions, SFT simply replicates these flaws. Furthermore, what if we don’t have a ton of perfect examples? Or what if our initial VLM isn’t very good at the task to begin with?

That’s where an alternative learning approach comes into play: reinforcement learning (RL). Instead of just learning from perfect examples, RL allows the VLM to learn through trial and error. It can learn from its own mistakes and even learn from the successes (and failures!) of more capable models.

Overcoming Limitations with Reinforcement Learning

Our recent paper [3] explores an “offline-to-online” RL technique that offers a stable and straightforward way to fine-tune VLMs for agent tasks. It’s similar to the familiar SFT process but with a crucial upgrade: the agent can now learn and improve on its own, even when the initial training data isn’t the best. This means we can take an open-weight VLM (a model whose code and weights are publicly available) and teach it the specific skills needed to excel in interactive, multi-modal environments.

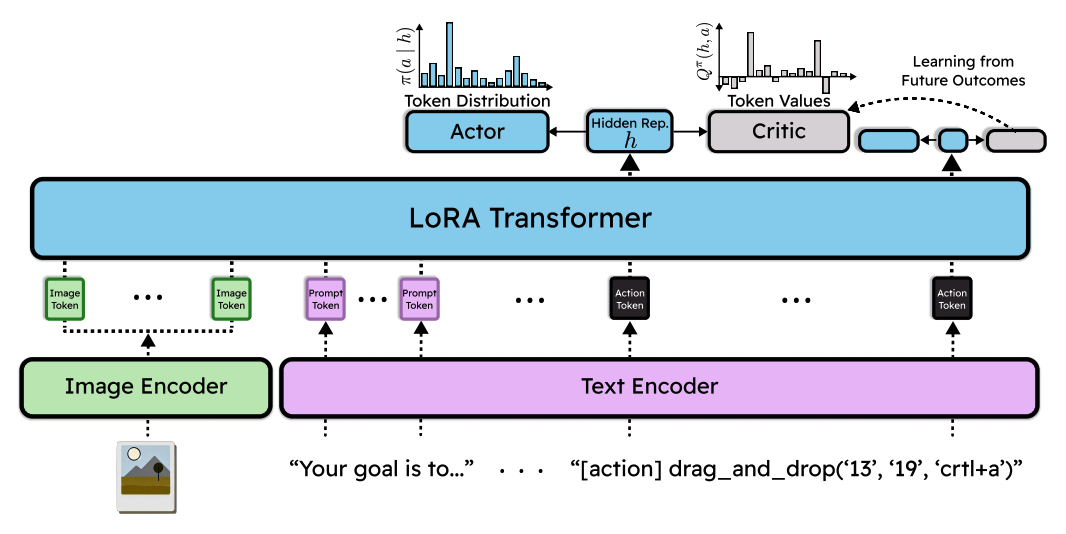

Our approach is a form of VLM Q-learning that we name Advantage-Filtered Supervised Fine-Tuning (AFSFT). AFSFT treats fine-tuning VLMs for agent tasks as an RL problem: the model is trained to optimize its performance within an environment by learning from the consequences (rewards) of its actions. While RL can introduce complexity, our work adopts an off-policy RL formulation that preserves the stability and simplicity of the widely used SFT workflow.

The central idea behind AFSFT is to let the agent improve beyond its initial dataset by selectively filtering out the individual tokens of a response that are estimated to degrade performance. This filtering mechanism is implemented by framing VLM agent fine-tuning as an off-policy actor-critic RL problem.
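To make the filtering idea concrete, here is a minimal plain-Python sketch of an advantage-filtered token loss. It assumes a binary filter that keeps a token only when its estimated advantage Q(s, a) - V(s) is non-negative, with V(s) taken as the expectation of the critic's Q-values under the current policy. The function names are hypothetical, and the paper's exact weighting and normalization may differ.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized list."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def afsft_loss(actor_logits, critic_q, taken_tokens):
    """Advantage-filtered negative log-likelihood over one response (sketch).

    actor_logits: per-position lists of vocabulary logits from the LM head.
    critic_q:     per-position lists of Q-value estimates for every token.
    taken_tokens: the token id actually present in the data at each position.
    Returns (loss, mask), where mask[t] == 1 means token t was kept.
    """
    total, kept, mask = 0.0, 0, []
    for logits, q, tok in zip(actor_logits, critic_q, taken_tokens):
        logp = log_softmax(logits)
        probs = [math.exp(lp) for lp in logp]
        # State value under the current policy: V(s) = sum_a pi(a|s) * Q(s, a).
        value = sum(p * qa for p, qa in zip(probs, q))
        advantage = q[tok] - value
        keep = 1 if advantage >= 0 else 0  # assumed binary filter
        mask.append(keep)
        if keep:
            total -= logp[tok]  # ordinary SFT term, only for kept tokens
            kept += 1
    # Average over kept tokens; a fully filtered response contributes zero loss.
    return total / max(kept, 1), mask


# Two positions over a 3-token vocabulary: the first token is kept, the second dropped.
loss, mask = afsft_loss(
    actor_logits=[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
    critic_q=[[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]],
    taken_tokens=[0, 1],
)
print(mask)  # [1, 0]
```

With the filter switched off, this reduces to ordinary SFT on every token; in a real training setup the mask would be treated as a constant so that no gradient flows from the actor loss into the critic.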

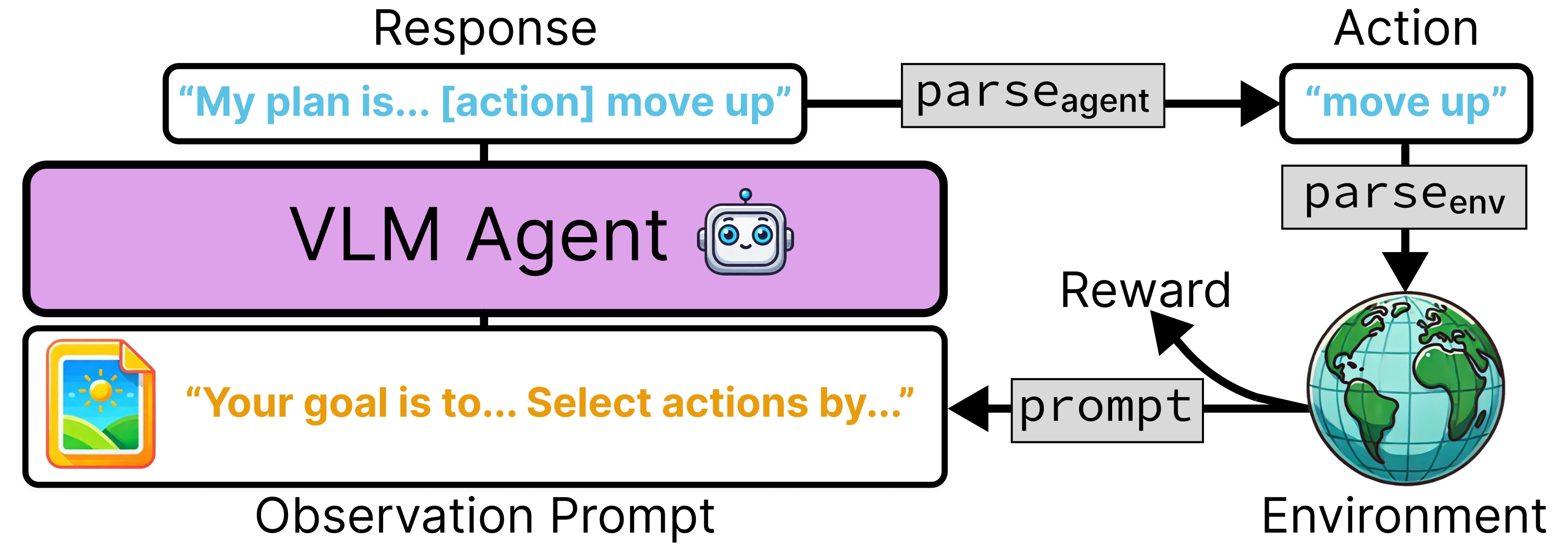

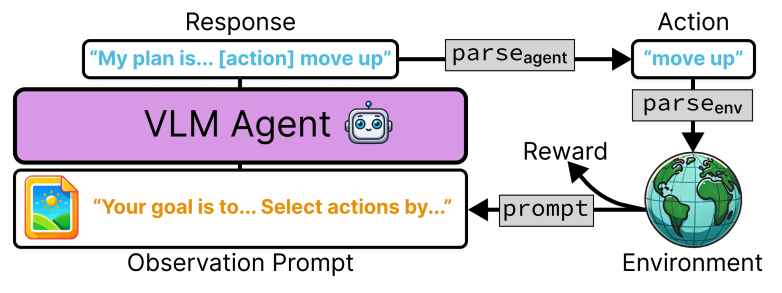

In this setting, the VLM acts as the policy (or actor), mapping text and image observations to text actions. A second output head, known as the critic, is integrated into the VLM architecture. The critic estimates the future return (value) associated with selecting each possible token in the vocabulary. This critic then guides the language modeling head (the actor), effectively filtering its output.
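The two-head layout can be sketched in plain Python, with the shared VLM backbone replaced by a given hidden vector. The class name, the purely linear heads, and the random initialization are illustrative assumptions rather than the paper's architecture details; the point is that both heads read the same hidden state and each emits one number per vocabulary token.

```python
import math
import random


class TwoHeadedLM:
    """Toy stand-in for a VLM with a language-modeling head and a critic head."""

    def __init__(self, hidden_size, vocab_size, seed=0):
        rng = random.Random(seed)

        def init():
            return [[rng.gauss(0.0, 0.1) for _ in range(hidden_size)]
                    for _ in range(vocab_size)]

        self.lm_head = init()      # actor weights: vocab_size x hidden_size
        self.critic_head = init()  # critic weights: vocab_size x hidden_size

    @staticmethod
    def _project(weights, hidden):
        # One dot product per vocabulary entry.
        return [sum(w * h for w, h in zip(row, hidden)) for row in weights]

    def forward(self, hidden):
        """Return (policy probabilities, per-token Q-values) for one position."""
        logits = self._project(self.lm_head, hidden)
        m = max(logits)
        exps = [math.exp(x - m) for x in logits]
        z = sum(exps)
        probs = [e / z for e in exps]
        q_values = self._project(self.critic_head, hidden)
        return probs, q_values


model = TwoHeadedLM(hidden_size=4, vocab_size=5)
probs, q = model.forward([0.5, -0.2, 0.1, 0.3])
value = sum(p * qa for p, qa in zip(probs, q))  # V(s) under the actor
```

Because the critic scores every vocabulary entry in a single pass, the state value and each token's advantage can be computed without extra forward passes.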

The agent interacts with the environment as follows: the environment provides text and image data, which are formatted into an input prompt for the VLM. The VLM generates a text response, which is parsed into a concrete action executed by the environment. The environment then provides a reward and the next observation. The method formalizes this interactive process as a token-based RL problem, where the VLM’s output sequence is treated as a series of sequential actions.
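One way to picture the token-level formulation is to flatten each environment step's response into one transition per token. The sketch below assumes the environment's reward is credited to the last token of a response and that earlier tokens receive zero reward; the dataclass and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TokenTransition:
    step: int        # environment step this token belongs to
    position: int    # index of the token within the response
    token: int       # token id chosen (the token-level "action")
    reward: float    # reward credited to this token
    terminal: bool   # True if this token ends the episode


def to_token_transitions(episode):
    """Flatten (response_tokens, reward, done) steps into token-level transitions.

    Assumption: each step's reward goes to the final token of that response.
    """
    transitions = []
    for step, (tokens, reward, done) in enumerate(episode):
        last = len(tokens) - 1
        for pos, tok in enumerate(tokens):
            is_last = pos == last
            transitions.append(TokenTransition(
                step=step,
                position=pos,
                token=tok,
                reward=reward if is_last else 0.0,
                terminal=done and is_last,
            ))
    return transitions


# Two environment steps: a 3-token response, then a 2-token response that ends the episode.
episode = [([5, 6, 7], 0.0, False), ([8, 9], 1.0, True)]
flat = to_token_transitions(episode)
```

Treating every token as an action lets the critic assign credit within a single response, which is what makes token-level filtering possible.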

Tackling the Syntax Barrier and Suboptimal Data

A key contribution of AFSFT is its ability to address the VLM’s difficulty in producing the strict action syntax required by many environments. This issue is closely related to the “tool-use” or “function-calling” capabilities of LLMs and LAMs, where larger models typically exhibit superior performance. Base VLMs often struggle to generate valid outputs, even when they possess a conceptual understanding of the task, creating a significant barrier to successful interaction and effective reward collection.

AFSFT overcomes this challenge by learning from datasets that may contain numerous invalid or suboptimal actions. The token-based learning format allows the agent to acquire specific syntax details from demonstrations, even if those demonstrations reflect poor overall decision-making. The learned critic plays a crucial role in filtering out tokens that would lead to invalid syntax or suboptimal semantic choices. Furthermore, the method incorporates penalties for invalid syntax, explicitly guiding the VLM to adhere to the required format.
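The syntax penalty could look something like the following sketch. The regular expression, the example action names, and the penalty value of -1.0 are assumptions for illustration; here an invalid response is simply not executed and receives the penalty instead of an environment reward.

```python
import re

# Hypothetical action syntax of the form "[action] CHOICE".
ACTION_PATTERN = re.compile(r"^\s*\[action\]\s+(\S+)\s*$")


def parse_action(response, valid_actions):
    """Return the chosen action, or None if the response breaks the syntax."""
    match = ACTION_PATTERN.match(response)
    if match is None:
        return None
    choice = match.group(1)
    return choice if choice in valid_actions else None


def shaped_reward(response, valid_actions, env_step, invalid_penalty=-1.0):
    """Execute a valid action, or return a penalty for invalid syntax.

    env_step: callable mapping a parsed action to the environment's reward.
    invalid_penalty: assumed value; the paper's penalty may differ.
    """
    action = parse_action(response, valid_actions)
    if action is None:
        return invalid_penalty, None
    return env_step(action), action


actions = {"hit", "stand"}  # hypothetical card-game actions
print(shaped_reward("[action] hit", actions, lambda a: 1.0))        # (1.0, 'hit')
print(shaped_reward("I would probably hit", actions, lambda a: 1.0))  # (-1.0, None)
```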

From Offline Data to Online Interaction

The AFSFT approach is particularly well-suited for an offline-to-online fine-tuning process. It can effectively learn from static datasets, including those generated by basic prompting or even random valid actions. This capability helps the agent overcome the action syntax challenge early in the training process. Unlike pure SFT, AFSFT’s filtering mechanism enables it to surpass the quality of this initial, potentially limited, dataset.
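Here is a toy, single-state illustration (a bandit rather than a VLM) of why filtering can beat the data it learns from. The critic is replaced by per-action mean rewards, and the filter keeps samples whose estimated advantage is non-negative, which is an assumed rule. All names are hypothetical, and the example only illustrates the mechanism.

```python
from collections import Counter, defaultdict


def behavior_clone(dataset):
    """Plain SFT on a bandit: the policy is the empirical action frequency."""
    counts = Counter(action for action, _ in dataset)
    total = sum(counts.values())
    return {a: c / total for a, c in counts.items()}


def estimate_q(dataset):
    """Mean observed reward per action (a stand-in for a learned critic)."""
    sums, counts = defaultdict(float), Counter()
    for action, reward in dataset:
        sums[action] += reward
        counts[action] += 1
    return {a: sums[a] / counts[a] for a in counts}


def advantage_filtered_clone(dataset):
    """Clone only samples whose action has non-negative estimated advantage."""
    q = estimate_q(dataset)
    pi = behavior_clone(dataset)
    value = sum(pi[a] * q[a] for a in pi)  # V under the cloned policy
    # The best action always satisfies q >= value, so `kept` is never empty.
    kept = [(a, r) for a, r in dataset if q[a] - value >= 0]
    return behavior_clone(kept)


def expected_reward(policy, q):
    return sum(p * q[a] for a, p in policy.items())


# A random-action dataset in which only "up" is rewarded.
dataset = [("left", 0.0), ("right", 0.0), ("up", 1.0)] * 10
q = estimate_q(dataset)
print(expected_reward(behavior_clone(dataset), q))            # about 0.333
print(expected_reward(advantage_filtered_clone(dataset), q))  # 1.0
```

Plain cloning reproduces the dataset's mix of good and bad actions, while the filtered version keeps only the above-average ones and so outperforms the data it was trained on.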

Importantly, AFSFT facilitates a smooth transition to online learning. Once the agent can interact with the environment, it can gather new data from its own experience. This self-generated data can then be used to further refine its policy through additional training. This iterative self-improvement is a significant advantage over methods restricted to static datasets.
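The offline-to-online data flow can be sketched on a toy problem in which the offline dataset never contains the rewarding action, so only self-collected experience can reveal it. The greedy "policy" and epsilon-greedy exploration stand in for fine-tuning and sampling from the VLM. The environment, function names, and hyperparameters are hypothetical.

```python
import random

ACTIONS = ["left", "right", "up"]


def toy_env(action):
    """Hypothetical one-step environment: only "up" succeeds."""
    return 1.0 if action == "up" else 0.0


def greedy_action(buffer):
    """Pick the action with the highest mean reward seen so far (unseen = 0)."""
    def mean_reward(a):
        rewards = [r for act, r in buffer if act == a]
        return sum(rewards) / len(rewards) if rewards else 0.0
    return max(ACTIONS, key=mean_reward)


def offline_to_online(offline_data, rounds=20, episodes_per_round=20,
                      epsilon=0.3, seed=0):
    """Start from a static dataset, then keep adding self-collected experience."""
    rng = random.Random(seed)
    buffer = list(offline_data)  # offline phase: begin from the static data
    for _ in range(rounds):
        # Online phase: act with the current policy plus some exploration.
        for _ in range(episodes_per_round):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy_action(buffer)
            buffer.append((action, toy_env(action)))
        # "Training" is implicit here: greedy_action re-reads the grown buffer.
    return greedy_action(buffer), buffer


best, buffer = offline_to_online([("left", 0.0), ("right", 0.0)] * 5)
print(best)  # the rewarding action, discovered only through online exploration
```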

Experimental Validation

We put this technique to the test using three open-weight VLMs (PaliGemma, xGen-MM, and MoonDream2) across three challenging visual agent domains: Gym Cards, BabyAI, and MiniWoB.

Initial experiments revealed that prompting alone resulted in surprisingly low valid action syntax accuracy and task success rates for these smaller VLMs, even when they were prompted to use simple formats like “[action] CHOICE”. In contrast, larger LLMs designed for tool use, such as xLAM, achieved higher syntax accuracy when given text descriptions of the visual input.
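Here is a minimal sketch of how the two metrics might be computed from evaluation logs. The per-response definition of syntax accuracy, the regular expression for the “[action] CHOICE” format, and the log layout are assumptions, not the paper's evaluation code.

```python
import re

# Hypothetical check for responses of the form "[action] CHOICE".
ACTION_RE = re.compile(r"^\s*\[action\]\s+\S+\s*$")


def evaluate(episodes):
    """Compute (valid-syntax accuracy, task success rate).

    episodes: list of (responses, succeeded) pairs, where `responses` holds the
    raw text the model produced at each step of one episode.
    """
    responses = [r for rs, _ in episodes for r in rs]
    valid = sum(1 for r in responses if ACTION_RE.match(r))
    syntax_accuracy = valid / len(responses) if responses else 0.0
    successes = sum(1 for _, ok in episodes if ok)
    success_rate = successes / len(episodes) if episodes else 0.0
    return syntax_accuracy, success_rate


logs = [
    (["[action] hit", "Sure! I would hit."], False),
    (["[action] stand"], True),
]
acc, success = evaluate(logs)
```

Tracking the two numbers separately distinguishes a model that understands the task but cannot format its answer from one that formats correctly but chooses poorly.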

However, after fine-tuning with AFSFT, the VLMs, especially xGen-MM and MoonDream2, showed substantial improvements in both valid action syntax accuracy and task success rate. On Gym Cards tasks, their performance reached levels comparable to or exceeding reference scores from other RL methods, even when starting from datasets of random or inaccurate actions. In MiniWoB browser tasks, AFSFT enabled MoonDream2 to learn from a noisy dataset (partially filled with random partial actions) and achieve a performance level significantly better than the base model and even standard SFT on the same data. Online learning experiments in BabyAI also demonstrated AFSFT’s ability to leverage exploration to improve syntax accuracy and task success, approaching near-perfect performance.

Overall, our results are promising, showing that this RL approach can effectively align VLMs with the specific demands of interactive decision-making.

Conclusion

So, what does this mean for the future? It suggests a powerful new pathway for developing more capable and adaptable visual agents using readily available VLM technology. By allowing these models to learn and self-improve through reinforcement learning, we can unlock their full potential for a wide range of interactive applications. Stay tuned for more exciting developments in this area!

References
