AI Agents: From Language to Multimodal Reasoning

AI Agents are rapidly evolving from language-only models to sophisticated multimodal reasoners. This post outlines our research journey in advancing AI Agents over the past year, accompanying my presentation at the ICCV 2025 Workshop on Multi-Modal Reasoning for Agentic Intelligence.

My talk follows up on my CVPR 2024 Tutorial talk on Language-based Agents and Large Action Models. Here are my slides. This accompanying post outlines the content of my talk and consolidates all relevant resources.

From Language-Only to Multimodality

Our journey began with building top-performing Language-only Agents. The core of our research focuses on training Large Action Models (LAMs) to serve as the agent’s ‘brain’. We found two key ingredients for this: a data pipeline that unifies expert trajectories from diverse sources, and a fine-tuning pipeline that combines supervised fine-tuning (SFT), low-rank adaptation (LoRA), and direct preference optimization (DPO).
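To make the first ingredient concrete, here is a minimal sketch of what unifying trajectories from different sources could look like. Everything in it is an illustrative assumption rather than the actual xLAM pipeline: the `Step` and `Trajectory` schema, the two source layouts ("A" and "B"), and all field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Step:
    """One agent turn in the unified format (hypothetical schema)."""
    thought: str
    tool_name: str
    tool_args: dict[str, Any]
    observation: str


@dataclass
class Trajectory:
    """A full task attempt in the unified format (hypothetical schema)."""
    task: str
    steps: list[Step] = field(default_factory=list)
    source: str = "unknown"


def from_source_a(record: dict[str, Any]) -> Trajectory:
    """Hypothetical source A: parallel lists of actions and observations."""
    steps = [
        Step(
            thought=action.get("reasoning", ""),
            tool_name=action["api"],
            tool_args=action.get("params", {}),
            observation=observation,
        )
        for action, observation in zip(record["actions"], record["observations"])
    ]
    return Trajectory(task=record["instruction"], steps=steps, source="A")


def from_source_b(record: dict[str, Any]) -> Trajectory:
    """Hypothetical source B: a flat, chat-style list of turns."""
    steps: list[Step] = []
    pending = None  # the assistant turn still waiting for its tool result
    for turn in record["turns"]:
        if turn["role"] == "assistant":
            pending = turn
        elif turn["role"] == "tool" and pending is not None:
            steps.append(
                Step(
                    thought=pending.get("content", ""),
                    tool_name=pending["call"]["name"],
                    tool_args=pending["call"]["arguments"],
                    observation=turn["content"],
                )
            )
            pending = None
    return Trajectory(task=record["query"], steps=steps, source="B")


# One converter per source; adding a new source means adding one function.
CONVERTERS = {"A": from_source_a, "B": from_source_b}


def unify(records: list[tuple[str, dict[str, Any]]]) -> list[Trajectory]:
    """Convert (source_name, raw_record) pairs into the unified format."""
    return [CONVERTERS[source](record) for source, record in records]
```

The point of the sketch is only the shape of the idea: once every source is mapped into one schema, the same fine-tuning code can consume all of it.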

In our recent xLAM paper [1], we demonstrated how to do this effectively, leading xLAM to become the top-performing model on the Berkeley Function Calling Leaderboard v2.

Building on this success, we then explored how these core training principles apply to Multimodal AI Agents. Our LATTE paper [2] shows that we can train highly effective Multimodal AI Agents for Visual Reasoning by collecting high-quality agent trajectories and fine-tuning a pre-trained VLM with this agentic data.

Exploring Self-Improvement via Simulation

These are indeed exciting results. However, a key limitation of both approaches is their reliance on carefully curated expert trajectories for training the agent’s ‘brain’. This poses two significant challenges: data collection and curation require extensive labor and, critically, the model’s performance is ultimately capped by the quality of the ‘expert’ input.

This motivated us to explore more advanced learning mechanisms, in particular automatic exploration in simulation, which allows the agent to discover new problem-solving strategies.

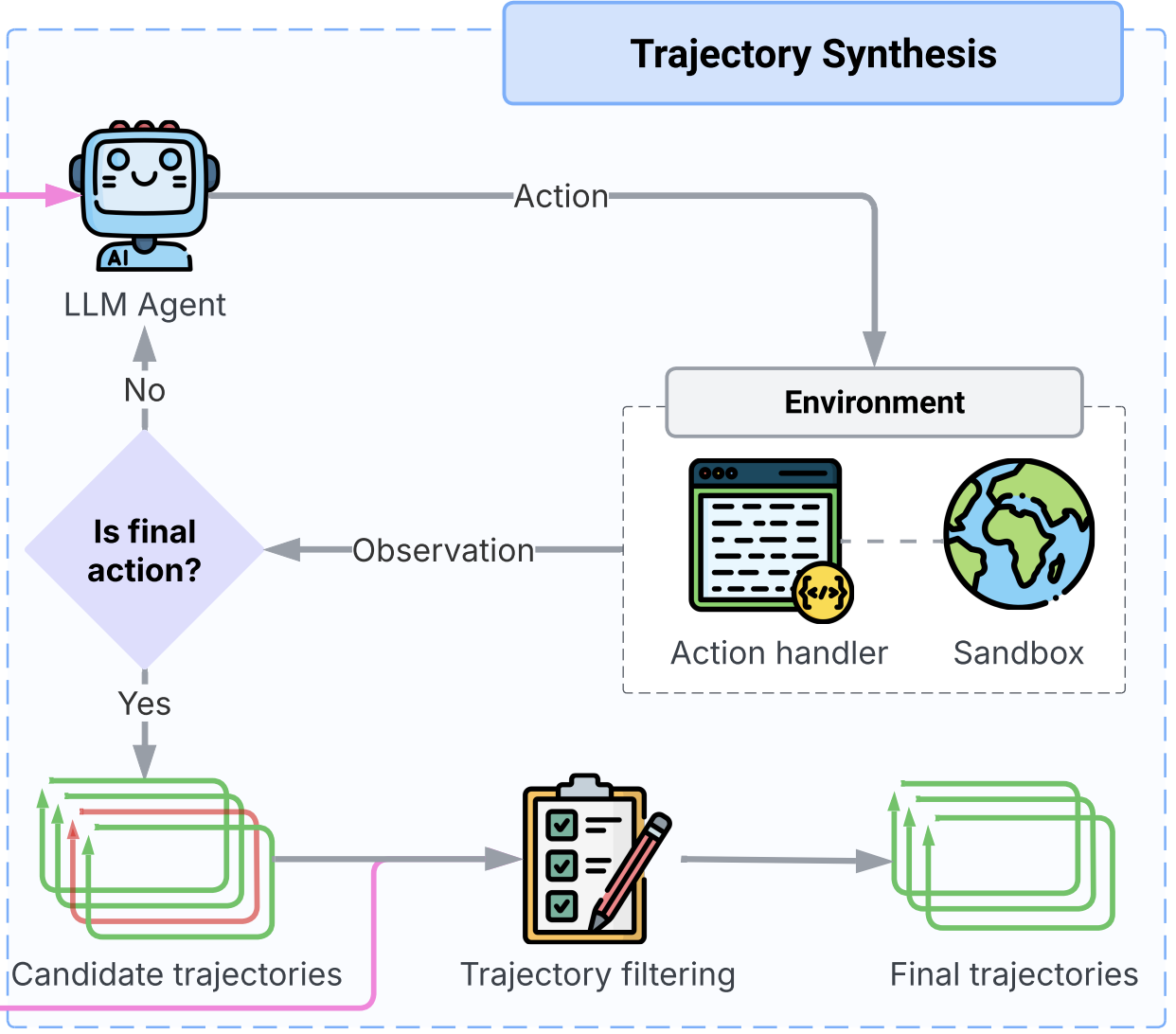

We study this in our LAM Simulator paper [3]. We put a language-based agent into an RL-style learning loop that automatically generates trajectories instead of relying on experts, and automatically grades them with rewards computed by evaluation functions.
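The generate-and-grade loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the LAM Simulator itself: the "agent" samples tool names at random, the tool list is made up, and `evaluate` is a hypothetical evaluation function that rewards calling the required tools in order.

```python
import random

Trajectory = list[str]  # toy trajectory: just a sequence of tool names

TOOLS = ["search", "lookup", "book", "cancel"]  # hypothetical tool set


def rollout(rng: random.Random, max_steps: int = 3) -> Trajectory:
    """Toy stand-in for the agent: samples tool calls uniformly at random."""
    return [rng.choice(TOOLS) for _ in range(max_steps)]


def evaluate(trajectory: Trajectory, required: list[str]) -> float:
    """Hypothetical evaluation function.

    Returns the fraction of required tool calls that appear in the
    trajectory in the required order (other calls may be interleaved).
    """
    position = 0
    for tool in trajectory:
        if position < len(required) and tool == required[position]:
            position += 1
    return position / len(required)


def explore(
    required: list[str],
    num_rollouts: int,
    threshold: float,
    seed: int = 0,
) -> list[tuple[Trajectory, float]]:
    """Generate trajectories, grade each one, and keep the well-rewarded ones.

    The kept (trajectory, reward) pairs are what a training step would
    consume in place of expert demonstrations.
    """
    rng = random.Random(seed)
    graded = []
    for _ in range(num_rollouts):
        trajectory = rollout(rng)
        graded.append((trajectory, evaluate(trajectory, required)))
    return [(traj, reward) for traj, reward in graded if reward >= threshold]


if __name__ == "__main__":
    kept = explore(required=["search", "book"], num_rollouts=200, threshold=1.0)
    print(f"kept {len(kept)} of 200 rollouts for training")
```

In a real system the random policy would be the model being trained, and the kept trajectories (or the rewards themselves) would feed the next update, closing the loop.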

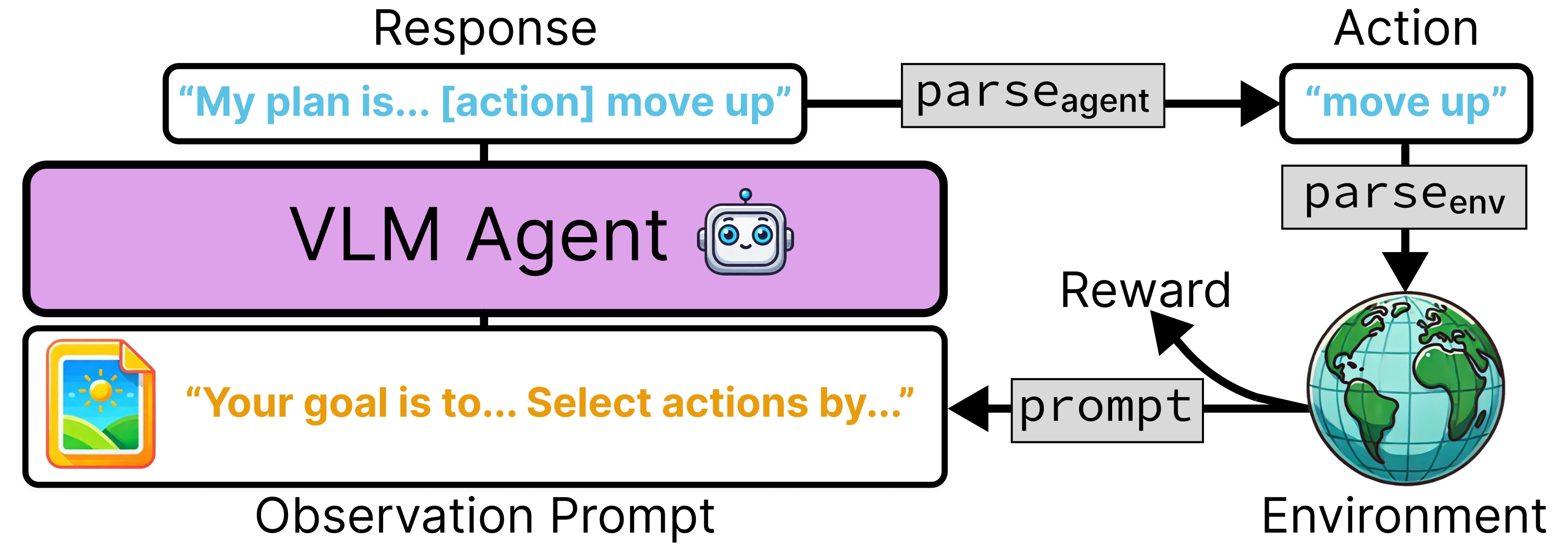

We then extended this idea of self-exploration and improvement to Multimodal AI Agents. In our ViUnit paper [4], we showed that visual programming agents can improve their robustness and performance by leveraging our automatically generated visual unit tests. Similarly, in related work, we trained a VLM-based agent [5] with reinforcement learning and saw substantial performance improvements over baseline VLM agents.
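As a rough intuition for how unit tests can help a visual programming agent, the sketch below scores candidate programs by their pass rate on a set of tests and picks the best one. It is a simplified stand-in, not the ViUnit method: "images" are plain dictionaries of object counts, and the candidate programs and tests are hand-written, hypothetical examples for the query "How many dogs are in the image?".

```python
from typing import Callable

Image = dict[str, int]            # toy "image": object name -> count
Program = Callable[[Image], int]  # a candidate visual program
UnitTest = tuple[Image, int]      # (input image, expected answer)


def pass_rate(program: Program, tests: list[UnitTest]) -> float:
    """Fraction of unit tests the program answers correctly."""
    passed = 0
    for image, expected in tests:
        try:
            if program(image) == expected:
                passed += 1
        except Exception:
            # A crashing program simply fails that test.
            pass
    return passed / len(tests)


def select_program(
    candidates: dict[str, Program], tests: list[UnitTest]
) -> tuple[str, float]:
    """Return the name and pass rate of the best-scoring candidate."""
    scores = {name: pass_rate(prog, tests) for name, prog in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical candidates for "How many dogs are in the image?"
CANDIDATES: dict[str, Program] = {
    "count_all": lambda img: sum(img.values()),  # wrong: counts every object
    "brittle": lambda img: img["dog"],           # crashes when no dog is present
    "count_dogs": lambda img: img.get("dog", 0), # handles the empty case
}

# Hypothetical unit tests, including an image with no dogs.
TESTS: list[UnitTest] = [
    ({"dog": 2, "cat": 1}, 2),
    ({"cat": 3}, 0),
    ({"dog": 1}, 1),
]

if __name__ == "__main__":
    print(select_program(CANDIDATES, TESTS))
```

The pass rate can serve either as a selection signal at inference time, as here, or as a reward during training; which of these a given system uses is a design choice this sketch does not settle.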

Conclusion

These are exciting times for AI Agents. While we’ve made incredible progress in transitioning agents from language to robust visual reasoning, the path forward is clear: enabling agents to operate with more modalities beyond Language and Vision, and developing more advanced, self-improving learning mechanisms. I look forward to continuing our work and seeing how quickly this space develops in the research community.

References
